Cache memory performance. The impact of cache memory capacity on the performance of the third generation Core i5. How to find out the current cache size

Today's article is not a standalone piece: it simply continues our study of three generations of the Core architecture under equal conditions (begun at the end of last year and continued recently). This time, though, we step slightly to the side. The core and cache frequencies stay the same as before, but the cache capacity shrinks. Why is this necessary? For the purity of the experiment we used the “full” Core i7 of the last two generations, testing it with Hyper-Threading enabled and disabled. For a year and a half now, however, the Core i5 has come with 6 MiB of L3 instead of 8. Cache capacity clearly affects performance less than is sometimes believed, but the effect exists and cannot be avoided. Moreover, Core i5 processors sell in larger volumes than Core i7, and in the first generation nobody shortchanged them in this respect. They were limited in a different way instead: the UnCore clock of first-generation Core i5 processors was only 2.13 GHz, so our “Nehalem” is not exactly a 2.4 GHz 700-series processor but a slightly faster one. However, we saw no need to greatly expand the list of participants or redo the test conditions. As we have warned more than once, testing this lineup yields no new practical information, because real processors operate in completely different modes. But for those who want to understand all the subtle points thoroughly, we think such testing will be interesting.

Test bench configuration

We decided to limit ourselves to just four processors, two of them the main participants: both are quad-core Ivy Bridge chips, but with different L3 cache capacities. The third is “Nehalem HT”: last time its final score turned out almost identical to that of “plain Ivy Bridge”. The fourth is “plain Nehalem”. As we have already said, it is a little faster than a real first-generation Core i5 at 2.4 GHz (because the 700 series had a slightly lower UnCore frequency), though not dramatically so. The comparison is interesting: on one side there are two rounds of microarchitecture improvements, on the other a reduced cache. A priori, we can assume the former will win out in most cases. But by how much, and how comparable the “first” and “third” generation i5 processors are overall, is already a good topic for research. That comparison is adjusted for the UnCore frequency, of course; if many readers want an absolutely exact comparison, we will do one later.

Testing

Traditionally, we divide all tests into groups and show on the diagrams the average result for each group of tests/applications (you can find out more about the testing methodology in a separate article). The results in the diagrams are given in points; the performance of the reference test system from the 2011 version of our methodology is taken as 100 points. It is based on an AMD Athlon II X4 620 processor, but the amount of memory (8 GB) and the video card () are standard for all tests of the “main line” and can only be changed within special studies. Those interested in more detailed information are, again traditionally, invited to download a Microsoft Excel table in which all results are presented both converted into points and in “natural” units.

Interactive work in 3D packages

There is some effect of cache capacity, but it is less than 1%. Accordingly, both Ivy Bridge processors can be considered identical, and the architectural improvements let the new Core i5 outperform the old Core i7 just as easily as the new Core i7 does.

Final rendering of 3D scenes

Here, of course, no architectural improvements can make up for processing fewer threads. But today what matters most to us is something else: cache capacity has no influence on performance at all. With Celeron and Pentium, as we have already established, it is a different story: rendering programs are sensitive to L3 capacity, but only when there is not enough of it. And 6 MiB for four cores, as we see, is quite enough.

Packing and Unpacking

Naturally, these tasks are sensitive to cache capacity, but here too the effect of increasing it from 6 to 8 MiB is fairly modest: approximately 3.6%. More interesting, in fact, is the comparison with the first generation. The architectural improvements let the new i5 beat even the old i7 at equal frequencies, but only in the overall standings, because two of the four tests are single-threaded and another one is dual-threaded. Data compression with 7-Zip is naturally fastest on Nehalem HT: eight threads are always faster than four at comparable per-thread performance. But if we limit ourselves to four threads, our “Ivy Bridge 6M” loses not only to its 8 MiB sibling but also to the old Nehalem. Here the microarchitecture improvements are completely outweighed by the smaller cache.

Audio encoding

What was somewhat unexpected was not the size of the difference between the two Ivy Bridge processors, but the fact that there was any difference at all. However, it is so small that it can be attributed to rounding or measurement error.

Compilation

Threads matter here, but so does cache capacity. However, as usual, not by much: about 1.5%. A more interesting comparison is with the first-generation Core with Hyper-Threading disabled. “On points” the new Core i5 wins even at the same frequency, but one of the three compilers (Microsoft's, to be precise) ran no faster on it than on Nehalem. In fact, the older processor was 5 seconds ahead, even though in this program the “full cache” Ivy Bridge is 4 seconds faster than Nehalem. Overall, we cannot say that the reduced L3 capacity seriously hurt the second- and third-generation Core i5, but there are some nuances.

Mathematical and engineering calculations

Again, there is less than 1% difference from the “older” die, and again a convincing victory over the first generation in all its forms. This is more the rule than the exception for such lightly threaded tests, but why not confirm it once again? Especially in such a refined form, where differences in frequency do not interfere, unlike in tests in normal mode, whether those differences are “standard” or come from Turbo Boost.

Raster graphics

But even with fuller use of multithreading, the picture does not always change. And cache capacity makes no difference at all.

Vector graphics

The same is true here, although only a couple of computation threads are needed.

Video encoding

Not so in this group, where, however, even Hyper-Threading does not let Nehalem compete on equal terms with its successors. But the newer processors are not much hampered by the smaller cache. More precisely, it hardly hurts at all, since the difference is again less than 1%.

Office software

As one might expect, there is no performance gain from a larger cache (more precisely, no drop from a smaller one). However, the detailed results show that the only multi-threaded test in this group (text recognition in FineReader) runs about 1.5% faster with 8 MiB of L3 than with 6 MiB. What is 1.5%, one might ask? From a practical point of view, nothing. But from a research point of view it is already interesting: as we see, it is multi-threaded tests that most often lack cache memory. As a result, a difference, albeit a small one, sometimes appears even where one would not expect it. There is nothing inexplicable about this. Roughly speaking, in lightly threaded tests each thread has 3 to 6 MiB of L3, while in multi-threaded tests it gets only 1.5 MiB (6 MiB shared by four threads). The former is plenty, but the latter may not be quite enough.

Java

However, the Java machine disagrees with this assessment, and that too is understandable. As we have written more than once, it is optimized not so much for x86 processors as for phones and coffee makers, where there may be many cores but very little cache memory. Sometimes there are few of both, since cores and cache are expensive resources in terms of both die area and power consumption. And while something can be done about cores and megahertz, cache is harder: the quad-core Tegra 3, for example, has only 1 MiB. Clearly the JVM can make use of more (like all bytecode systems), as we already saw when comparing Celeron and Pentium. But more than 1.5 MiB per thread, even if it can be useful, is not needed for the tasks included in SPECjvm 2008.

Games

We had high hopes for games, since they often turn out to be even more demanding of cache capacity than archivers. But that happens when there is very little cache, and 6 MiB, as we see, is enough. Again, quad-core Core processors of any generation, even at 2.4 GHz, are too powerful for the games we use, so the bottleneck is clearly not the processor but other components of the system. We therefore dusted off the low graphics quality modes. For such systems this is clearly too synthetic, but then all of our testing here is synthetic :)

When video cards and the like do not interfere, the difference between the two Ivy Bridge processors reaches an “insane” 3%. In practice you can ignore it, but in theory it is a lot. Only the archivers showed a larger difference.

Multitasking environment

We have seen this before: when we tested six-core LGA2011 processors. Now the situation repeats itself. The load is multi-threaded and some of the programs used are “greedy” for cache, yet increasing the cache only reduces average performance. How can this be explained? Perhaps only by arbitration becoming more complicated and the number of errors growing. Note that this only happens when the L3 capacity is relatively large and at least four computation threads are running simultaneously; in the budget segment the picture is completely different. After all, as our recent testing of Pentium and Celeron showed, increasing L3 from 2 to 3 MiB adds 6% performance for dual-core processors. For four- and six-core processors, to put it mildly, it adds nothing. Even less than nothing.

Total

The overall result is logical: since no significant difference between processors with different L3 sizes was found anywhere, there is none “in general” either. So there is no reason to be upset about the smaller cache in the second- and third-generation Core i5, because their first-generation predecessors are no match for them anyway. Older Core i7 processors, on average, also show only a similar level of performance. This is mainly, of course, because they lag in lightly threaded applications, and there are scenarios that they handle faster under equal conditions. But, as we have already said, in practice real processors are far from equal in frequency, so the practical difference between generations is greater than such studies can show.

Only one question remains open. We had to reduce the clock frequency considerably to ensure equal conditions with the first-generation Core, but will the observed patterns hold in conditions closer to reality? After all, just because four slow computation threads see no difference between 6 and 8 MiB of cache, it does not follow that four fast ones will not. Nor, however, does the opposite follow, so to close the topic of theoretical research for good we will need one more lab session, which we will do next time.

What is processor cache?

A cache is a part of memory that provides the fastest possible access and speeds up computation. It stores the pieces of data the processor requests most often, so that the processor does not have to fetch them from system memory every time.

System memory (RAM), as you know, exchanges data much more slowly than the processor works. When the processor needs some information, it sends a request to RAM over the memory bus. Having received the request, the RAM searches its “annals” for the data the processor needs and sends it back over the same bus. This round trip always took too long. So manufacturers decided to let the processor keep data somewhere nearby. The way the cache works is based on this simple idea.

Think of memory as a school library. A student asks the librarian for a book. She goes to the shelves, looks for it, returns to the student, checks it out properly and moves on to the next student. At the end of the day, she repeats the same routine when the books are returned. This is how a processor without a cache works.

Why does the processor need a cache?

Now imagine that the librarian is tired of constantly rushing back and forth with the books that are in demand year after year, day after day. She buys a large cabinet where she keeps the most frequently requested books and textbooks. The rest, of course, stay on the same shelves, but these are always at hand. How much time the cabinet saves, both for her and for everyone else! This is the cache.

So the cache only stores the most frequently needed data?

Yes, but it can do more. For example, having already stored frequently needed data, it can assess the situation (with the help of the processor) and request information that is about to be needed. A video rental customer who asked for the first part of “Die Hard” will most likely ask for the second next, and here it is! The processor cache works the same way. When it fetches data from RAM, it also fetches the data in neighboring memory cells. These fixed-size blocks of data are called cache lines.
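To make the idea of cache lines concrete, here is a minimal C# sketch (C# being the language of the code examples later in this text). It assumes 64-byte lines, the size reported for the machines discussed later; other processors may use a different size. The class `CacheLineMath` and its methods are hypothetical helpers, not a library API.

```csharp
// Hypothetical helper: address arithmetic for 64-byte cache lines.
static class CacheLineMath
{
    // Assumption: 64-byte lines, as on the machines discussed later in this text.
    public const long LineSize = 64;

    // Start address of the line containing `address` (clears the low 6 bits).
    public static long LineBase(long address) => address & ~(LineSize - 1);

    // Byte offset of `address` within its line (0..63).
    public static long OffsetInLine(long address) => address & (LineSize - 1);

    // True when both addresses fall into the same line,
    // so loading one of them also brings the other into the cache.
    public static bool SameLine(long a, long b) => LineBase(a) == LineBase(b);
}
```

For example, addresses 100 and 120 share the line that starts at address 64, while addresses 127 and 128 fall into different lines.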

What is a two-level cache?

A modern processor has at least two cache levels, the first and the second. They are designated by the letter L, from the English “Level”. The first, L1, is faster but small. The second, L2, is somewhat larger and slower, but still faster than RAM. The first-level cache is divided into an instruction cache and a data cache. The instruction cache stores the instructions the processor needs for its calculations, while the data cache stores the values needed for the current calculation. The second-level cache is used to load data from the computer's RAM.

The way cache levels work can also be explained with the school library example. Having filled the cabinet, the librarian realizes that it no longer holds all the books she constantly has to run across the hall for. The list of such books has grown, so she needs another cabinet just like the first. She does not throw away the first one (that would be a pity) and simply buys a second one. Now, once the first cabinet is full, she starts filling the second, which comes into play when the needed books no longer fit into the first. It is the same with cache levels. And as microprocessor technology develops, processor caches grow in size.

Will the cache continue to grow?

Hardly. The race for processor frequency did not last long either, and manufacturers found other ways to increase performance. The same goes for the cache: its size and the number of levels cannot be inflated endlessly. The cache should not turn into another slow RAM module, or grow the processor to half the size of the motherboard. After all, fast data access comes, first of all, at a cost in power consumption and in the processor's own resources. Cache misses (as opposed to cache hits), where the processor looks in the cache for data that is not there, have also become more frequent. The data in the cache is constantly updated using various replacement algorithms to increase the probability of a cache hit.
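The text does not say which replacement algorithms are used. One common textbook policy is LRU (least recently used): when the cache is full, evict the line that has gone unused the longest. The C# sketch below models a small fully associative LRU cache that only counts hits and misses. `LruCacheModel` is a hypothetical name, and real processors typically implement cheaper approximations of LRU rather than this exact scheme.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model: a fully associative cache of `capacity` lines with LRU replacement.
// It tracks line numbers only (no data) and just counts hits and misses.
sealed class LruCacheModel
{
    private readonly int capacity;
    private readonly LinkedList<long> order = new LinkedList<long>(); // front = most recently used
    private readonly Dictionary<long, LinkedListNode<long>> nodes =
        new Dictionary<long, LinkedListNode<long>>();

    public int Hits { get; private set; }
    public int Misses { get; private set; }

    public LruCacheModel(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        this.capacity = capacity;
    }

    // Simulates one access to `line`; returns true on a hit.
    public bool Access(long line)
    {
        if (nodes.TryGetValue(line, out var node))
        {
            // Hit: move the line to the most-recently-used position.
            order.Remove(node);
            order.AddFirst(node);
            Hits++;
            return true;
        }

        if (nodes.Count == capacity)
        {
            // Miss with a full cache: evict the least recently used line.
            long victim = order.Last.Value;
            order.RemoveLast();
            nodes.Remove(victim);
        }
        nodes[line] = order.AddFirst(line);
        Misses++;
        return false;
    }
}
```

For example, with room for two lines, the access sequence 1, 2, 1, 3, 2 produces one hit (the second access to line 1) and four misses. Line 3 evicts line 2, and the final access to line 2 then evicts line 1.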


A cache is a fast intermediate buffer that holds the information most likely to be requested. Accessing data in the cache is faster than fetching the original data from main memory (RAM), and faster than fetching it from external storage (a hard drive or solid-state drive). This reduces the average access time and increases the overall performance of the computer system.
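The reduction in average access time can be quantified with the standard average memory access time (AMAT) formula, applied level by level. The C# sketch below assumes a two-level hierarchy. The helper name and all numbers in the example are illustrative assumptions, not measurements from this text.

```csharp
using System;

static class AccessTime
{
    // Average memory access time (AMAT) of a two-level cache, in cycles:
    //   AMAT = L1 hit time + L1 miss rate * (L2 hit time + L2 miss rate * memory latency)
    // Miss rates are local: the L2 miss rate is counted over the accesses that reach L2.
    public static double TwoLevelAmat(double l1HitCycles, double l1MissRate,
                                      double l2HitCycles, double l2MissRate,
                                      double memoryCycles)
    {
        if (l1MissRate < 0 || l1MissRate > 1 || l2MissRate < 0 || l2MissRate > 1)
            throw new ArgumentException("Miss rates must be between 0 and 1.");

        double l1MissPenalty = l2HitCycles + l2MissRate * memoryCycles;
        return l1HitCycles + l1MissRate * l1MissPenalty;
    }
}
```

Consider some assumed values: 4 cycles for L1 and 12 for L2 (within the ranges given in the cache-level descriptions), 200 cycles for RAM, and miss rates of 10% and 20%. With these, `AccessTime.TwoLevelAmat(4, 0.1, 12, 0.2, 200)` gives 4 + 0.1 × (12 + 0.2 × 200) = 9.2 cycles, much closer to the L1 latency than to that of RAM.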

A number of central processing unit (CPU) models have their own cache in order to minimize access to random access memory (RAM), which is slower than registers. Cache memory can provide significant performance benefits when the RAM clock speed is significantly lower than the CPU clock speed. The clock speed for cache memory is usually not much less than the CPU speed.

Cache levels

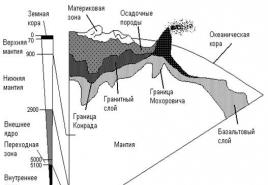

The CPU cache is divided into several levels. In a general-purpose processor today, the number of levels can be as high as 3. Level N+1 cache is typically larger in size and slower in access speed and data transfer than level N cache.

The fastest memory is the first-level cache, L1. In fact, it is an integral part of the processor, since it is located on the same chip and is part of the functional blocks. In modern processors, L1 is usually split into two caches, an instruction cache and a data cache (a Harvard architecture). Most processors cannot function without an L1 cache. L1 runs at the processor frequency and can generally be accessed every clock cycle. Multiple reads and writes can often be performed simultaneously. Access latency is usually 2–4 core clock cycles. Its capacity is usually small, no more than 384 KB.

Second in speed is L2, the second-level cache, which is usually located on the chip like L1. In older processors it was a set of chips on the motherboard. L2 capacity ranges from 128 KB to 1–12 MB. In modern multi-core processors, the on-chip L2 is split into separate per-core memories: with a total cache size of nM MB, each core gets nM/nC MB, where nC is the number of processor cores. Typically, the latency of an on-chip L2 cache is 8 to 20 core clock cycles.

The third-level cache is the slowest, but it can be very large, more than 24 MB. L3 is slower than the previous levels but still significantly faster than RAM. In multiprocessor systems it is shared and serves to synchronize data from different L2 caches.

Sometimes there is also a fourth-level cache, usually located on a separate chip. An L4 cache is justified only for high-performance servers and mainframes.

The problem of synchronization between different caches (within one processor or across several) is solved by cache coherence. There are three options for exchanging information between caches of different levels, or, as they are called, cache architectures: inclusive, exclusive and non-exclusive.

Welcome to GECID.com! It is well known that the clock speed and number of processor cores directly affect performance, especially in multi-threaded workloads. We decided to check what role the L3 cache plays in this.

To study this question, the online store pcshop.ua kindly provided us with a 2-core processor with a nominal frequency of 3.7 GHz and 3 MB of 12-way set-associative L3 cache. Its opponent was a 4-core processor with two cores disabled and its clock frequency reduced to 3.7 GHz. Its L3 cache is 8 MB and 16-way set-associative. That is, the key difference between them lies precisely in the last-level cache: the Core i7 has 5 MB more of it.

If this significantly affects performance, then it will be possible to conduct another test with a representative of the Core i5 series, which has 6 MB of L3 cache on board.

But for now let's return to the current test. The participants were paired with a video card and 16 GB of DDR4-2400 RAM. We compared the systems at Full HD resolution.

Let's start with live gameplay, which is not synchronized, so a clear winner cannot be determined. In Dying Light at maximum quality settings, both systems deliver a comfortable FPS level, although processor and video card load was on average higher with the Intel Core i7.

Arma 3 is strongly CPU-dependent, so a larger cache should help even at ultra-high graphics settings. Moreover, video card load peaked at 60% in both cases.

In DOOM at ultra-high graphics settings, we could synchronize only the first few frames, where the Core i7 is about 10 FPS ahead. Because the rest of the gameplay is not synchronized, we cannot determine how much the cache affects the frame rate. In any case, the frame rate stayed above 120 FPS, so even 10 FPS has little impact on playability.

Evolve Stage 2 completes the mini-series of live gameplay tests. Here we would probably see a difference between the systems, since in both cases the video card is only about half loaded. Subjectively, the FPS with the Core i7 seems higher, but it is impossible to say for sure, since the scenes are not identical.

Benchmarks give a more informative picture. For example, in GTA V you can see that outside the city the 8 MB cache gives an advantage of 5-6 FPS, and in the city up to 10 FPS due to the higher load on the video card. At the same time, the video accelerator itself is far from fully loaded in both cases, and everything depends on the CPU.

We ran The Witcher 3 with extreme graphics settings and a high post-processing profile. In one of the scripted scenes, the Core i7's advantage reaches 6-8 FPS in places, during sharp camera changes that require loading new data. When the processor and video card loads reach 100% again, the difference shrinks to 2-3 FPS.

The maximum graphics preset in XCOM 2 did not seriously challenge either system, and the frame rate was around 100 FPS. But here too the larger cache translated into a speed gain of 2 to 12 FPS. Although neither processor managed to load the video card fully, the 8 MB version did better in this respect in places.

Dirt Rally, which we ran with the Very High preset, surprised us the most. At certain moments the difference reached 25 FPS solely thanks to the larger L3 cache, which let the video card be loaded 10-15% better. However, the average benchmark results showed a more modest victory for the Core i7: only 11 FPS.

Rainbow Six Siege produced an interesting situation. Outdoors, in the first frames of the benchmark, the Core i7 was 10-15 FPS ahead. Indoors, processor and video card load reached 100% in both cases, so the difference dropped to 3-6 FPS. But at the end, when the camera moved outside the house, the Core i3 again fell behind by more than 10 FPS in places. On average, the result was 7 FPS in favor of the 8 MB cache.

The Division at maximum graphics quality also responds well to more cache. The very first frames of the benchmark fully loaded all Core i3 threads, while the total load on the Core i7 was 70-80%. However, the speed difference at those moments was only 2-3 FPS. A little later, the load on both processors reached 100%, and at certain moments the Core i3 was even ahead, though only by 1-2 FPS. On average, the difference was about 1 FPS in favor of the Core i7.

In turn, the Rise of the Tomb Raider benchmark at high graphics settings clearly showed the advantage of the processor with much more cache in all three test scenes. Its average performance is 5-6 FPS better, and a closer look at each scene shows that in places the Core i3 lags by more than 10 FPS.

With the Very High preset, however, the load on the video card and processors increases, so in most cases the difference between the systems shrinks to a few frames. Only briefly can the Core i7 show a more significant lead. Its average advantage in the benchmark dropped to 3-4 FPS.

Hitman is also less affected by the L3 cache. Still, with the ultra-high detail profile, the additional 5 MB allowed better video card utilization, which turned into an extra 3-4 FPS. This has no particularly critical impact on performance, but, for purely sporting reasons, it is nice to have a winner.

At high graphics settings, Deus Ex: Mankind Divided immediately demanded maximum computing power from both systems. The difference was therefore at best 1-2 frames in favor of the Core i7, as the average confirms.

Running it again with the ultra-high preset loaded the video card even more, so the processor's influence on the overall speed dropped further. Accordingly, the difference in L3 cache had virtually no effect, and the average FPS differed by less than half a frame.

Based on the test results, the L3 cache does affect gaming performance, but only when the video card is not fully loaded. In such cases, increasing the cache by more than 2.5 times (from 3 to 8 MB) can yield 5-10 extra FPS. Roughly speaking, all other things being equal, each additional MB of L3 cache adds only 1-2 FPS (5-10 FPS spread over 5 MB).

So, when comparing neighboring lines, such as Celeron and Pentium, or models with different amounts of L3 cache within the Core i3 series, the main performance gain comes from higher frequencies. Additional threads and cores come second. Therefore, when choosing a processor, you should still focus on the main characteristics first and only then pay attention to the cache size.

That's all. Thank you for your attention. We hope this material was useful and interesting.


Almost all developers know that the processor cache is a small but fast memory that stores data from recently accessed memory areas. The definition is short and fairly accurate. However, knowing the “boring” details of how caches work is necessary to understand the factors that affect code performance.

In this article we will look at a number of examples illustrating various features of caches and their impact on performance. The examples are in C#; the choice of language and platform does not greatly affect the performance assessment or the final conclusions. That holds within reasonable limits, naturally: if you choose a language in which reading a value from an array is equivalent to a hash table lookup, you will not get any interpretable results. Translator's notes are in italics.


Example 1: Memory Access and Performance

How much faster do you think the second loop is than the first?

```csharp
int[] arr = new int[64 * 1024 * 1024];

// first loop
for (int i = 0; i < arr.Length; i++) arr[i] *= 3;

// second loop
for (int i = 0; i < arr.Length; i += 16) arr[i] *= 3;
```

The first loop multiplies every value in the array by 3; the second multiplies only every sixteenth value. The second loop does only about 6% of the first loop's work, yet on modern machines both take roughly the same time: 80 ms and 78 ms respectively (on my machine).

The explanation is simple: memory access. The speed of these loops is determined primarily by the memory subsystem, not by the speed of integer multiplication. As we will see in the next example, the number of accesses to RAM is the same in both cases.

Example 2: Impact of Cache Lines

Let's dig deeper and try step values other than 1 and 16:

```csharp
for (int i = 0; i < arr.Length; i += K /* step */) arr[i] *= 3;
```

Let's look at the running time of this loop for different values of the step K.

Note that for step values from 1 to 16, the running time stays virtually unchanged. But for values greater than 16, the running time roughly halves each time we double the step. This does not mean that the loop somehow magically runs faster; the number of iterations simply decreases as well. The key point is the identical running time for steps from 1 to 16.

The reason is that modern processors do not access memory one byte at a time but in small blocks called cache lines. A cache line is typically 64 bytes. When you read any value from memory, at least one whole cache line is loaded into the cache. Subsequent accesses to any value in that line are very fast.

Because 16 int values occupy 64 bytes, loops with steps from 1 to 16 touch the same number of cache lines, or more precisely, all cache lines of the array. With step 32, every second line is accessed; with step 64, every fourth.
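The counting argument can be checked without a stopwatch. This C# sketch is built around a hypothetical helper, `StrideLines.LinesTouched`. It assumes 4-byte `int` elements, 64-byte lines and an array that starts on a line boundary, and counts the distinct cache lines the loop from Example 2 touches for a given step K:

```csharp
using System;
using System.Collections.Generic;

static class StrideLines
{
    const int IntSize = sizeof(int); // 4 bytes
    const int LineSize = 64;         // assumed cache line size in bytes

    // Counts the distinct cache lines touched by
    //   for (i = 0; i < length; i += k) arr[i] *= 3;
    // for an int array that starts on a line boundary.
    public static int LinesTouched(int length, int k)
    {
        if (k <= 0) throw new ArgumentOutOfRangeException(nameof(k));
        var lines = new HashSet<long>();
        for (long i = 0; i < length; i += k)
            lines.Add(i * IntSize / LineSize); // index of the line holding arr[i]
        return lines.Count;
    }
}
```

For a 1,024-element array (4 KB, or 64 lines), steps 1 and 16 both touch all 64 lines, step 32 touches 32 and step 64 touches 16. This holds even though step 1 executes 16 times as many iterations as step 16.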

Understanding this is very important for some optimization techniques. The number of memory accesses depends on how the data is laid out in memory. For example, unaligned data may require two accesses to main memory instead of one. As we saw above, the operation will then be up to twice as slow.
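The alignment point can be illustrated with a minimal C# sketch, again assuming 64-byte lines. The hypothetical helper `Alignment.LinesSpanned` counts how many cache lines a read of `size` bytes starting at byte `address` covers:

```csharp
using System;

static class Alignment
{
    const long LineSize = 64; // assumed cache line size in bytes

    // Number of cache lines covered by the byte range [address, address + size).
    public static long LinesSpanned(long address, long size)
    {
        if (address < 0) throw new ArgumentOutOfRangeException(nameof(address));
        if (size <= 0) throw new ArgumentOutOfRangeException(nameof(size));
        long firstLine = address / LineSize;
        long lastLine = (address + size - 1) / LineSize;
        return lastLine - firstLine + 1;
    }
}
```

An 8-byte value at address 64 lies within one line, while the same value at address 60 straddles two lines, so loading it may require two memory accesses.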

Example 3: Level 1 and 2 cache sizes (L1 and L2)

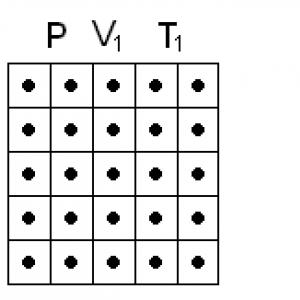

Modern processors typically have two or three levels of cache, usually called L1, L2 and L3. To find out the cache sizes at different levels, you can use the CoreInfo utility or the Windows API function GetLogicalProcessorInformation. Both methods also report the cache line size for each level. On my machine, CoreInfo reports 32 KB L1 data caches, 32 KB L1 instruction caches and 4 MB L2 caches. Each core has its own L1 caches, while each L2 cache is shared by a pair of cores:

```text
Logical Processor to Cache Map:
*--- Data Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64
*--- Instruction Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64
-*-- Data Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64
-*-- Instruction Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64
**-- Unified Cache 0, Level 2, 4 MB, Assoc 16, LineSize 64
--*- Data Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64
--*- Instruction Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64
---* Data Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64
---* Instruction Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64
--** Unified Cache 1, Level 2, 4 MB, Assoc 16, LineSize 64
```

Let's check this information experimentally. To do so, we walk through the array, incrementing every 16th value, which is an easy way to modify each cache line. When we reach the end, we start over from the beginning. Trying different array sizes, we should see performance drop when the array no longer fits into the cache at a given level.

The code is:

```csharp
int steps = 64 * 1024 * 1024; // number of iterations
int lengthMod = arr.Length - 1; // arr.Length must be a power of two
for (int i = 0; i < steps; i++)
{
    // x & lengthMod == x % arr.Length, because arr.Length is a power of two
    arr[(i * 16) & lengthMod]++;
}
```

Test results:

On my machine, performance drops noticeably after 32 KB and after 4 MB: these are the sizes of the L1 and L2 caches.
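To repeat the measurement across array sizes, the loop from Example 3 can be wrapped in a small timing harness. This is a minimal C# sketch. `CacheSizeProbe` and its methods are hypothetical names, timing uses `System.Diagnostics.Stopwatch`, and, as in the original code, the array length is assumed to be a power of two.

```csharp
using System;
using System.Diagnostics;

static class CacheSizeProbe
{
    // Runs the loop from Example 3 over `arr` and returns the elapsed time in milliseconds.
    public static double TimePass(int[] arr, int steps)
    {
        // `& lengthMod` only equals `% arr.Length` when the length is a power of two.
        if (arr.Length == 0 || (arr.Length & (arr.Length - 1)) != 0)
            throw new ArgumentException("Array length must be a power of two.", nameof(arr));

        int lengthMod = arr.Length - 1;
        var sw = Stopwatch.StartNew();
        for (int i = 0; i < steps; i++)
            arr[(i * 16) & lengthMod]++; // one int per 64-byte line (16 ints x 4 bytes), wrapping around
        sw.Stop();
        return sw.Elapsed.TotalMilliseconds;
    }

    // Times the same number of steps for array sizes from 1 KB up to maxBytes, doubling each time.
    public static void Run(long maxBytes, int steps)
    {
        TimePass(new int[1024], steps); // warm-up pass so JIT compilation is not timed
        for (long bytes = 1024; bytes <= maxBytes; bytes *= 2)
        {
            double ms = TimePass(new int[bytes / sizeof(int)], steps);
            Console.WriteLine($"{bytes / 1024,8} KB: {ms,8:F1} ms");
        }
    }
}
```

For example, `CacheSizeProbe.Run(64 * 1024 * 1024, 64 * 1024 * 1024)` uses the same number of steps as Example 3 for array sizes from 1 KB to 64 MB. The sizes at which the time jumps should roughly match the cache sizes, although hardware prefetching and other effects can blur the steps.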

Example 4: Instruction Parallelism

Now let's look at something else. Which of these two loops do you think will run faster?

```csharp
int steps = 256 * 1024 * 1024;
int[] a = new int[2];

// first loop
for (int i = 0; i < steps; i++) { a[0]++; a[0]++; }

// second loop
for (int i = 0; i < steps; i++) { a[0]++; a[1]++; }
```

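It turns out that the second loop runs almost twice as fast, at least on all the machines I tested. Why? Because the instructions inside the loops have different data dependencies. In the first loop, every increment reads the value written by the previous one, so all of them form a single chain: a[0]++ -> a[0]++ -> a[0]++ -> ...

In the second loop there are two independent chains, one for a[0] and one for a[1]. An increment of a[1] does not have to wait for the preceding increment of a[0].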

The execution units of modern processors can perform a limited number of operations of certain kinds simultaneously, usually only a few. For example, the L1 cache can serve accesses to two addresses in parallel, and two simple arithmetic instructions can execute at the same time. In the first loop the processor cannot use these capabilities, but in the second it can.
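The two loops can be timed side by side with a small harness. The sketch below assumes .NET with top-level statements, and TimeDependent and TimeIndependent are hypothetical names. The JIT or the processor may narrow or widen the gap on a particular machine.

```csharp
using System;
using System.Diagnostics;

// First loop: both increments hit a[0], so each one waits for the previous result.
static long TimeDependent(int steps)
{
    int[] a = new int[2];
    var sw = Stopwatch.StartNew();
    for (int i = 0; i < steps; i++) { a[0]++; a[0]++; }
    sw.Stop();
    return sw.ElapsedMilliseconds;
}

// Second loop: a[0] and a[1] form two independent chains that can overlap.
static long TimeIndependent(int steps)
{
    int[] a = new int[2];
    var sw = Stopwatch.StartNew();
    for (int i = 0; i < steps; i++) { a[0]++; a[1]++; }
    sw.Stop();
    return sw.ElapsedMilliseconds;
}

int iterations = 256 * 1024 * 1024;
Console.WriteLine($"a[0]++; a[0]++;  {TimeDependent(iterations)} ms");
Console.WriteLine($"a[0]++; a[1]++;  {TimeIndependent(iterations)} ms");
```

Both functions perform exactly the same number of increments. The only difference is whether consecutive increments depend on each other.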

Example 5: Cache Associativity

One of the key questions in cache design is whether data from a given memory region can be stored in any cache cell or only in some of them. There are three possible solutions:

- Direct-mapped cache: each cache line of RAM can be stored in only one predefined cache cell. The simplest mapping is line_index_in_memory % number_of_cache_cells. Two lines that map to the same cell cannot be in the cache at the same time.

- N-way set-associative cache: each line can be stored in N different cache cells. For example, in a 16-way cache a line may be stored in any of the 16 cells that make up its group (set). Typically, lines whose indices have equal least significant bits share one group.

- Fully associative cache: any line can be stored in any cache cell. In its behavior, this solution resembles a hash table.
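All three schemes can be described by one toy model: a cache of totalLines cells split into groups of `ways` cells each. Setting ways = 1 gives a direct-mapped cache, and setting ways = totalLines gives a fully associative one. The sketch below is an illustration only, not how any real processor computes placement. CandidateSlots is a hypothetical name, and the code assumes .NET with top-level statements.

```csharp
using System;

// Toy model: totalLines cache cells grouped into sets of `ways` cells.
//   ways == 1          -> direct-mapped cache
//   ways == totalLines -> fully associative cache
// Returns the first cell and the number of cells where memory line `lineIndex` may live.
static (int firstSlot, int count) CandidateSlots(long lineIndex, int totalLines, int ways)
{
    int sets = totalLines / ways;        // number of groups
    int set = (int)(lineIndex % sets);   // low bits of the line index pick the group
    return (set * ways, ways);           // any cell inside that group is allowed
}

Console.WriteLine(CandidateSlots(5, 8, 1)); // direct-mapped: prints (5, 1)
Console.WriteLine(CandidateSlots(5, 8, 2)); // 2-way set-associative: prints (2, 2)
Console.WriteLine(CandidateSlots(5, 8, 8)); // fully associative: prints (0, 8)
```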

For example, on my machine the 4 MB L2 cache is 16-way set-associative. All of RAM is divided into sets of lines according to the least significant bits of their indices, and the lines of each set compete for one group of 16 L2 cache cells.

Since the L2 cache holds 65,536 lines (4 * 2^20 / 64) and each group consists of 16 cells, there are 4,096 groups in total. Thus the lower 12 bits of a line's index determine which group the line belongs to (2^12 = 4,096). As a result, lines whose addresses differ by a multiple of 262,144 bytes (4,096 * 64) share the same group of 16 cells and compete for space in it.
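The arithmetic above can be checked directly. The sketch below assumes the L2 geometry reported by CoreInfo earlier (4 MB, 16-way, 64-byte lines) and .NET with top-level statements. SetIndex is a hypothetical helper for illustration.

```csharp
using System;

const int CacheBytes = 4 * 1024 * 1024; // 4 MB L2, as reported by CoreInfo above
const int LineSize = 64;
const int Ways = 16;

int lines = CacheBytes / LineSize;  // 65,536 cache lines
int sets = lines / Ways;            // 4,096 groups
int setStride = sets * LineSize;    // 262,144 bytes: addresses this far apart share a group

// Group that a byte address maps to: drop the 6 offset bits, keep the next 12 bits.
int SetIndex(long address) => (int)((address / LineSize) % sets);

Console.WriteLine($"{lines} lines, {sets} sets, set stride {setStride} bytes");
Console.WriteLine(SetIndex(0) == SetIndex(setStride)); // True
```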

For associativity effects to show up, we need to keep accessing a large number of lines from the same group, for example with the following code:

public static long UpdateEveryKthByte(byte[] arr, int K)
{
    const int rep = 1024 * 1024; // number of iterations
    Stopwatch sw = Stopwatch.StartNew(); // System.Diagnostics.Stopwatch
    int p = 0;
    for (int i = 0; i < rep; i++)
    {
        arr[p]++;
        p += K;
        if (p >= arr.Length) p = 0; // wrap around to the start of the array
    }
    sw.Stop();
    return sw.ElapsedMilliseconds;
}

The method increments every Kth element of the array, starting over when it reaches the end, and stops after a fairly large number of iterations (2^20). I ran it for different array sizes and step values K. Results (blue: long running time, white: short):

Blue areas correspond to cases where, with data constantly being modified, the cache cannot hold all the required data at once. Bright blue corresponds to a running time of about 80 ms, almost white to about 10 ms.

Let's deal with the blue areas:

- Why do vertical lines appear? Vertical lines correspond to step values at which too many lines (more than 16) from one group are accessed. For these values, the 16-way cache on my machine cannot hold all the necessary data.

Some of the bad step values are powers of two: 256 and 512. For example, consider a step of 512 and an 8 MB array. With this step, the array consists of 32 sections (8 * 2^20 / 262,144), which compete with each other for cells in 512 cache groups (262,144 / 512). There are 32 sections, but each group has only 16 cells, so there is not enough room for all of them.

Other step values that are not powers of two are simply unlucky. They also cause many accesses to the same cache groups and produce the vertical blue lines in the figure. Lovers of number theory are invited to work out why.

- Why do the vertical lines stop at the 4 MB boundary? When the array is 4 MB or smaller, the 16-way cache behaves like a fully associative cache; that is, it can hold all the data in the array without conflicts. No more than 16 sections compete for one cache group (262,144 * 16 = 4 * 2^20 = 4 MB).

- Why is there a big blue triangle in the upper left? Because with a small step and a large array, the cache cannot fit all the necessary data. Associativity plays a secondary role here; the limit comes from the size of the L2 cache.

For example, with an array size of 16 MB and a stride of 128, we access every 128th byte, thus modifying every second array cache line. To store every second line in the cache, you need 8 MB of cache, but on my machine I only have 4 MB.

Even if the cache were fully associative, it would not allow 8 MB of data to be stored in it. Note that in the already discussed example with a stride of 512 and an array size of 8 MB, we only need 1 MB of cache to store all the necessary data, but this is impossible due to insufficient cache associativity.

- Why does the triangle fade in gradually from the left? The maximum intensity occurs at a step of 64 bytes, which equals the cache line size. As we saw in the first and second examples, repeated accesses to the same cache line cost almost nothing. For example, with a step of 16 bytes we get four memory accesses for the price of one.

Since the number of iterations is the same in our test for any step value, a cheaper step results in less running time.
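The two limits discussed above can be computed without timing anything:

- Capacity: how many bytes of cache the touched lines need.
- Associativity: how many touched lines land in the same group.

The sketch below assumes the 4 MB, 16-way, 64-byte-line L2 from the text. It also assumes the loop runs long enough to visit the whole array once. Footprint and MaxLinesPerSet are hypothetical names, and the code uses .NET top-level statements.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

const long KB = 1024, MB = 1024 * 1024;

// Capacity: bytes of cache needed to hold every line the stride-K loop touches.
static long Footprint(long arrayBytes, long stride, long lineSize = 64)
{
    long linesTouched = stride >= lineSize
        ? (arrayBytes + stride - 1) / stride        // every access lands in a new line
        : (arrayBytes + lineSize - 1) / lineSize;   // smaller strides touch every line
    return linesTouched * lineSize;
}

// Associativity: the largest number of distinct touched lines mapping to one group.
// With a 16-way cache, anything above 16 means these lines evict each other.
static int MaxLinesPerSet(long arrayBytes, long stride, long lineSize = 64, int sets = 4096)
{
    var linesPerSet = new Dictionary<long, HashSet<long>>();
    for (long p = 0; p < arrayBytes; p += stride)
    {
        long line = p / lineSize;
        long set = line % sets;
        if (!linesPerSet.TryGetValue(set, out var lines))
            linesPerSet[set] = lines = new HashSet<long>();
        lines.Add(line);
    }
    return linesPerSet.Values.Max(s => s.Count);
}

foreach (var (size, stride) in new[] { (16 * MB, 128L), (8 * MB, 512L), (4 * MB, 512L) })
    Console.WriteLine($"{size / MB} MB, K = {stride}: footprint {Footprint(size, stride) / KB} KB, " +
                      $"max lines per group {MaxLinesPerSet(size, stride)}");
```

For the three cases it reports footprints of 8,192 KB, 1,024 KB and 512 KB, with at most 64, 32 and 16 lines per group:

- 16 MB with K = 128 fails on capacity.
- 8 MB with K = 512 fits easily but fails on associativity.
- 4 MB with K = 512 just fits both limits.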

Cache associativity is an interesting effect that can show up under certain conditions. Unlike the other problems discussed in this article, it is not very serious, and it is certainly not something that requires constant attention when writing programs.

Example 6: False Cache Sharing

On multi-core machines you may run into another problem: cache coherence. Processor cores have partially or completely separate caches. On my machine the L1 caches are separate (as usual), and there are two L2 caches, each shared by a pair of cores. The details vary, but in general modern multi-core processors have multi-level hierarchical caches, in which the fastest but smallest caches belong to individual cores.

When one core modifies a value in its cache, the other cores can no longer use the old value, so the value in their caches must be updated. Moreover, the entire cache line must be updated, since caches operate on whole lines.

Let's demonstrate this problem with the following code:

private static int[] s_counter = new int[1024];

private void UpdateCounter(int position)
{
    for (int j = 0; j < 100000000; j++)
    {
        s_counter[position] = s_counter[position] + 3;
    }
}

If on my four-core machine I call this method with parameters 0, 1, 2, 3 simultaneously from four threads, then the running time will be 4.3 seconds. But if I call the method with parameters 16, 32, 48, 64, then the running time will be only 0.28 seconds.

Why? In the first case, the four values being updated by the threads are likely to end up in one cache line. Each time one core increments its value, it invalidates the copies of that line in the other cores' caches, and every other core then has to fetch the line again. This defeats the caching mechanism and kills performance.
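A self-contained way to run both configurations is to start one thread per position and time them together. The sketch below assumes .NET with top-level statements, and RunThreads is a hypothetical name. It uses a local array instead of the static s_counter field.

Note that the array's first element is not guaranteed to start a cache line. Positions 0 to 3 are therefore very likely, but not certain, to share one line. Positions 16, 32, 48 and 64 are always 64 bytes apart and so always sit in separate 64-byte lines.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Starts one thread per position; each thread repeatedly updates counters[pos],
// mirroring UpdateCounter. Returns the wall-clock time in milliseconds.
static long RunThreads(int[] positions, int iterations)
{
    int[] counters = new int[1024];
    var threads = new Thread[positions.Length];
    for (int t = 0; t < positions.Length; t++)
    {
        int pos = positions[t]; // per-thread copy captured by the lambda
        threads[t] = new Thread(() =>
        {
            for (int j = 0; j < iterations; j++)
                counters[pos] = counters[pos] + 3;
        });
    }

    var sw = Stopwatch.StartNew();
    foreach (Thread th in threads) th.Start();
    foreach (Thread th in threads) th.Join();
    sw.Stop();
    return sw.ElapsedMilliseconds;
}

const int Iterations = 100_000_000;
// Neighbouring ints: very likely to share one 64-byte cache line.
Console.WriteLine($"0, 1, 2, 3:     {RunThreads(new[] { 0, 1, 2, 3 }, Iterations)} ms");
// 16 ints = 64 bytes apart: each counter sits in its own cache line.
Console.WriteLine($"16, 32, 48, 64: {RunThreads(new[] { 16, 32, 48, 64 }, Iterations)} ms");
```

The usual fix suggested by this experiment is padding: spacing per-thread data at least one cache line apart. As noted below, some processors soften the effect in simple cases like this one, so the gap may be smaller than the 4.3 s vs 0.28 s measured above.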

Example 7: Hardware Complexity

Even now that the principles of cache operation are no secret to you, the hardware can still surprise you. Processors differ from each other in their optimization methods, heuristics and other implementation details.

The L1 cache of some processors can access two cells in parallel if they belong to different groups, but only sequentially if they belong to the same group. As far as I know, some can even access different quarters of the same cell in parallel.

Processors can also surprise you with clever optimizations. For example, the code from the previous false-sharing example does not behave as intended on my home computer: in the simplest cases the processor can optimize the work and reduce the negative effects. If you modify the code a little, everything falls into place.

Here's another example of weird hardware quirks:

private static int A, B, C, D, E, F, G;

private static void Weirdness()
{
    for (int i = 0; i < 200000000; i++)
    {
        <some code>
    }
}

Substituting three different variants for <some code> gives surprising results.

Incrementing fields A, B, C, D takes longer than incrementing fields A, C, E, G. Even stranger, incrementing just A and C takes longer than incrementing A, C, E and G. I don't know exactly why this happens, but perhaps it is related to memory banks. If you have any thoughts on this, please share them in the comments.

On my machine none of this is observed, although abnormally bad results do occur occasionally; most likely the task scheduler makes its own “adjustments”.

The lesson from this example is that it is very difficult to fully predict the behavior of hardware. Yes, you can predict a lot, but you must constantly confirm your predictions through measurements and testing.